| Version 45 (modified by omamce, 5 years ago) (diff) |

|---|

Monitor, debug and relaunching

This section describes the monitoring tools, the tools to identify and solve problems, and the tools to monitor and restart the post processing jobs if needed.

Table of contents

Table of contents

1. Check status of your simulations

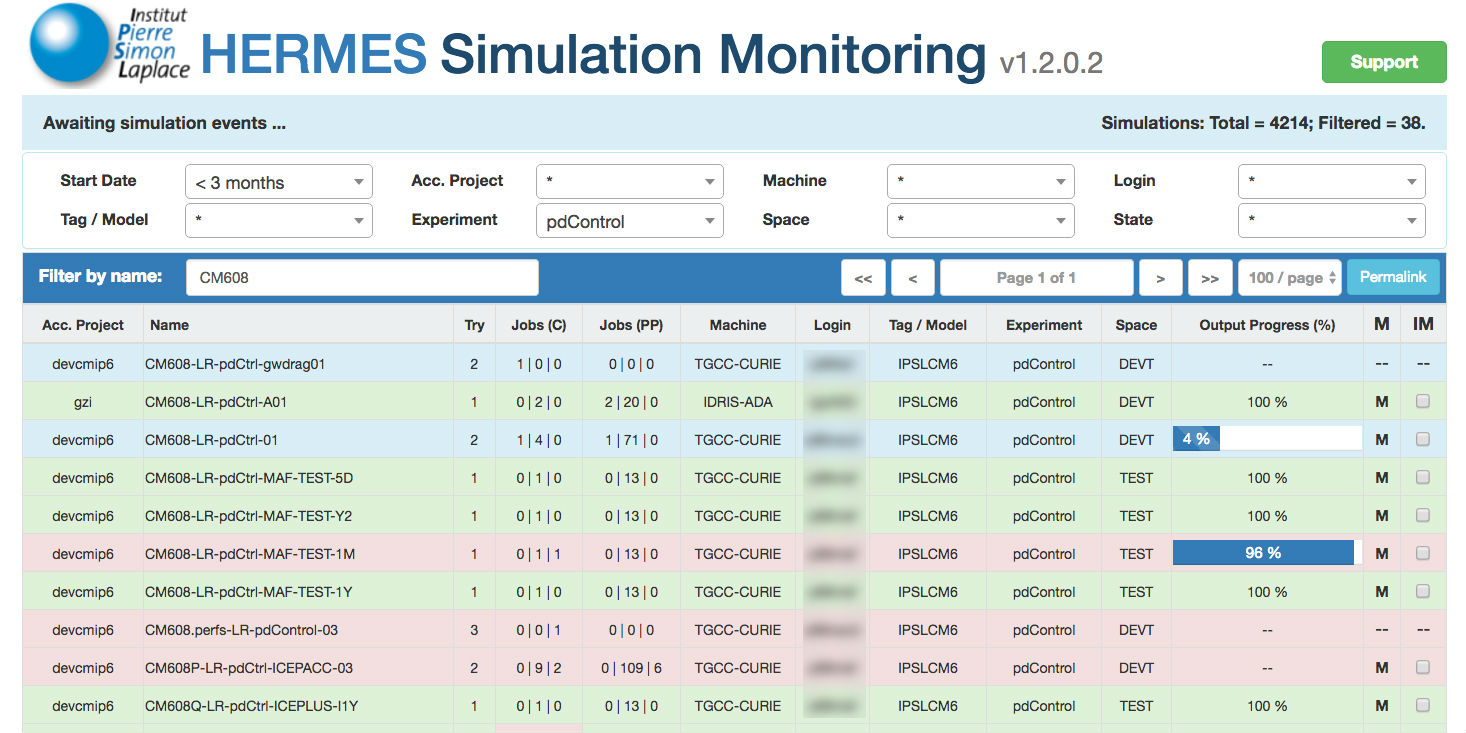

1.1. Supervisor : hermes.ipsl.upmc.fr

Since 2016, hermes.ipsl.upmc.fr is recommended to check and follow your simulations. Outside IPSL, you need a certificate to have access to it.

1.2. How to verify the status of your simulation

We strongly encourage you to check your simulation frequently during run time.

1.2.1. System tools

The batch manager at each computing center provides tools to check the status of your jobs. For example to know if the job is on queue, running or suspended.

1.2.1.1. TGCC

You can use ccc_mstat on Irene. To see the available options and useful scripts, see Working on Irene?.

1.2.1.2. IDRIS

You can use llq on Ada. To see the available options and useful scripts, see Working on Ada.

1.2.2. run.card

When the simulation has started, the file run.card is created by libIGCM using the template run.card.init. run.card contains information of the current run period and the previous periods already finished. This file is updated at each run period by libIGCM. You can find here information of the time consumption of each period. The status of the job is set to OnQueue, Running, Completed or Fatal.

1.2.3. RunChecker

This tool provided with libIGCM allows you to find out your simulations' status.

1.2.3.1. Example of a RunChecker output for a successful simulation

1.2.3.2. General description

Below is a diagram of a long simulation that failed.

- In this block, you will find information about the simulation directories.

- Here is information about the main job; the information comes from the config.card and the run.card :

- The first line returns the job name and the date of the last time data saved to disk in the run.card.

- DateBegin - DateEnd : start and end dates of the simulation as defined in config.card.

- PeriodState : variable coming from the run.card giving the run's status :

- OnQueue, Waiting : the run is queued ;

- Running : the job is running ;

- Completed : the run was completed successfully ;

- Fatal : the run failed.

- Current Period : this variable from run.card shows which integration step (most often one month or one year) is being computed

- CumulPeriod : variable from run.card. Number of the period being computed

- Pending Rebuilds, Nb | From | To : number of files waiting to be "rebuild", date of the oldest and the latest files. Most of the configuration use parallel I/O and have not more rebuild steps.

- The third block contains the status of the latest post processing jobs, the Rebuilds, the Pack, the Monitoring and the Atlas. Only the computed periods are returned for the Monitoring and the Atlas. For the other processing jobs, the computed periods and the number of successfully transferred files are returned.

- Lastly, the current date.

1.2.3.3. Usage and options

The script can be started from any machine :

path/to/libIGCM/RunChecker.job [-u user] [-q] [-j n] [-s] [-p path] job_name

- -u user : starts the Checker for the simulation of another user

- -q : silence mode

- -j n : displays n post processing jobs (10 by default)

- -s : looks for a simulation $WORKDIR and adds it to its catalog of simulations before displaying the information

- -p path : !!!absolute!!! path of the directory containing the config.card instead of the job_name.

1.2.3.4. Use

This listing allows you to detect a few known errors :

- Job running but the date of the last time output was written to disk in the run.card is much older than the current date :

- Date is in red in the post processing listing : this means that errors during file transfers occurred in the post processing job.

- For a given post processing job, the number of successfully-transferred files varies according to the date : this might mean that errors occurred.

In some cases (such as for historical simulations where the COSP outputs are activated starting from 1979 ...) this behavior is normal!

- A PeriodState to Fatal indicates that an error occurred either in the main job or in one of the post processing jobs.

- If the number of rebuilds waiting is above...

1.2.3.5. Good things to know

During the first integration of a simulation using IPSLCM5, an additional rebuild file is transferred. This extra file is the NEMO "mesh_mask.nc" file. It is created and transferred only during the first step. It is then used for each "rebuild" of the NEMO output files to mask the variables.

1.3. End of simulation

Once your simulation is finished you will receive an email saying that the simulation was "Completed" or that it "Failed" and two files will be created in the working directory of your experiment:

- run.card

- Script_Output_JobName

A Debug/ directory is created if the simulation failed in a way that is correctly diagnosed by libIGCM. This directory contains diagnostic text files for each model component. It won't be created if the job reaches the time limit and is stopped by the batch scheduler.

If the crash is not properly handeld by ligIGCM, you will find a lot of files in $RUN_DIR. In Script_Output_JobName, find the line starting with IGCM_sys_Cd : and get the location of the RUN_DIR.

If the simulation was successfully completed output files will be stored in the following directory:

- $CCCSTORE/IGCM_OUT/TagName/[SpaceName]/[ExperimentName]/JobName at TGCC

- ergon:IGCM_OUT/TagName/[SpaceName]/[ExperimentName]/JobName at IDRIS

with the following subdirectories:

- RESTART = tar of the restart files for all model components and with the pack frequency

- DEBUG = tar of the debug text files for all model components

- ATM

- CPL

- ICE

- OCE

- SRF

- SBG

- Out = run log files

- Exe = executables used for the run

- ATM/Output, CPL/Output, etc... = NetCDF output of the model components

If SpaceName was set to TEST the output files will remain in the work directories

- $SCRATCHDIR/IGCM_OUT/TagName/[SpaceName]/[ExperimentName]/JobName at TGCC

- $WORKDIR/IGCM_OUT/TagName/[SpaceName]/[ExperimentName]/JobName at ada/IDRIS

1.4. Diagnostic tools : Checker

1.4.1. TimeSeries_Checker

The TimeSeries_Checker.job can be used in diagnostic mode to check if all time series have been successfully created. Read more further below #Startorrestartpostprocessingjobs1.

1.4.2. SE_Checker

See further below #SE_Checker.jobrecommendedmethod.

2. Analyzing the Job output : Script_Output

Reminder --> This file contains three parts:

- copying the input files

- running the model

- post processing

These three parts are defined as follows:

####################################### # ANOTHER GREAT SIMULATION # ####################################### 1st part (copying the input files) ####################################### # DIR BEFORE RUN EXECUTION # ####################################### 2nd part (running the model) ####################################### # DIR AFTER RUN EXECUTION # ####################################### 3rd part (post processing)

A few common bugs are listed below:

- if the file ends before the second part, possible reasons can be:

- you didn't delete the existing run.card file in case you wanted to overwrite the simulation;

- you didn't specify OnQueue in the run.card file in case you wanted to continue the simulation;

- one of the input files was missing (e.g. it doesn't exist, the machine has a problem,...);

- the frequencies (RebuildFrequency, PackFrequency ...) do not match PeriodLength.

- if the file ends in the middle of the second part, it's most likely because you didn't request enough memory or CPU time.

- if the file ends in the third part, it could be caused by:

- an error during the execution;

- a problem while copying the output;

- a problem when starting the post processing jobs.

If the following message is displayed in the second part of the file, it's because there was a problem during the execution:

======================================================================== EXECUTION of : mpirun -f ./run_file > out_run_file 2>&1 Return code of executable : 1 IGCM_debug_Exit : EXECUTABLE !!!!!!!!!!!!!!!!!!!!!!!!!! !! IGCM_debug_CallStack !! !------------------------! !------------------------! IGCM_sys_Cp : out_run_file xxxxxxxxxxxx_out_run_file_error ========================================================================

If the following message is displayed :

======================================================================== EXECUTION of : mpirun -f ./run_file > out_run_file 2>&1 ========================================================================

If there is a message indicating that the "restartphy.nc" file doesn't exist it means that the model simulation was completed but before the end date of your simulation. If this happens and if your model creates an output log other than the simulation output log, you must refer to this log. For example, the output file of the ocean model is stored on the file server under this name:

IGCM_sys_Put_Out : ocean.output xxxxxxxx/OCE/Debug/xxxxxxxx_ocean.output

For LMDZ your output log is the same as the simulation output log and it has not been copied to the storage space. If your simulation has been performed on $SCRATCHDIR (TGCC) you can retrieve it there. Otherwise, you must restart your simulation using $WORKDIR (IDRIS) as the working directory keeping all needed files. You must also change the RUN_DIR_PATH variable. See here before restarting it.

In general, if your simulation stops you can look for the keyword "IGCM_debug_CallStack" in this file. This keyword will come after a line explaining the error you are experiencing.

Example : --Debug1--> IGCM_comp_Update IGCM_debug_Exit : IGCM_comp_Update missing executable create_etat0_limit.e !!!!!!!!!!!!!!!!!!!!!!!!!! !! IGCM_debug_CallStack !! !------------------------!

3. Debug

3.1. Where does the problem come from ?

Your problem could come from a programming error. To find it you can use the text output of the model components located in the Debug subdirectories. Your problem could be caused by the computing environment. This problem is not always easy to identify. It is therefore important to perform benchmark simulations to learn about the usual behavior of a successfully completed simulation.

3.1.1. The Debug directory

If the simulation failed due to abnormal exit from the executable, a Debug/ directory is created in the working directory. It contains output text files of all model components for your configuration. You should read them to look for errors. For example :

- xxx_out_gcm.e_error --> lmdz text output

- xxx_out_orchidee --> orchidee text output

- xxx_ocean.output --> nemo text output

- xxx_inca.out --> inca text output

- xxx_run.def --> lmdz parameter files

- xxx_gcm.def --> lmdz parameter files

- xxx_traceur.def --> lmdz parameter files

- xxx_physiq.def --> lmdz parameter files

- xxx_orchidee.def --> orchidee parameter files

Your best friend is : grep -i error * ; grep -i 'e r r o r' *ocean.output

3.1.2. Programming error

Please, take the time to read and analyze modifications you have done in the code. Nobody codes perfectly.

3.1.3. Unknown error

In this case, it's possible to relaunch the main job to run again the last period.

If the simulation stopped before coming to the end due to an error, it is possible to relaunch the latest period after eventual modifications. The simulation will then read run.card to know where to start and the simulation will continue until the end (if the problem was solved).

To relaunch manually you first need to be sure that no files have been stored for the same period. In libIGCM there are 2 scripts that help you do this cleaning up :

- The error occurred before the packs have been created:

path/to/libIGCM/clean_PeriodLength.job

- The error occurred after the packs were created:

path/to/libIGCM/clean_latestPackperiod.job [CCYY] # CCYY = year up to which you are deleting everything (this year included). By default, it's the current year in run.card

3.2. On Irene : How to use the ddt debugger for the coupled model (or any other MPMD mode)

Read this page

3.3. Debug on Irene

Read this page

3.4. Start or restart post processing jobs

Please look at the next paragraph.

4. Start or restart post processing jobs

You can run post processing jobs once the main job is finished (for example if the post processing job was deactivated in config.card or if you encountered a bug).

You can :

- work directly in the experiment directory (which looks like PATH_MODIPSL/config/IPSLCM5A/ST11/). Possible option but not recommended.

- work in a dedicated directory located in the experiment directory (e.g. PATH_MODIPSL/config/IPSLCM5A/ST11/POST_REDO). Best choice.

- work in a dedicated directory which is independent of the experiment directory (e.g. $WORKDIR/POST_REDO).

For the last two options you must first:

- If your post processing directory is a subdirectory located in the experiment directory (e.g. PATH_MODIPSL/config/IPSLCM5A/ST11/POST_REDO):

- copy (or link ln -s) the files and directories config.card POST, COMP and run.card

cd $PATH_MODIPSL/config/IPSLCM5A/ST11 mkdir -p POST_REDO cd POST_REDO/ cp -pr ../COMP . cp -pr ../POST . cp -pr ../config.card . cp -pr ../run.card .

- copy (or link ln -s) the files and directories config.card POST, COMP and run.card

- If your post processing directory is an independent directory :

- create a dedicated directory (for all simulations) ;

- transfer libIGCM and run ins_job (for all simulations) ;

- create a directory for the simulation to analyze (i.e. for each simulation) :

Before submitting a post processing job at TGCC (rebuild_fromWorkdir.job, pack_debug.job, pack_output.job, pack_restart.job, monitoring.job, create_ts.job, create_se.job) you must make sure that the submission group in present in the job header (#MSUB -A genxxxx). If it isn't, add it.

4.1. Restart REBUILD

- Copy the libIGCM/rebuild_fromWorkdir.job file to the experiment directory or to the dedicated directory;

- Edit it:

StandAlone=true libIGCM= # Points to the libIGCM directory of the experiment PeriodDateBegin= # beginning date of the last serie to be "rebuilded" NbRebuildDir= # Number of directories in the series to be "rebuilded" # until the PeriodDateBegin REBUILD_DIR= # Path for the backup of files waiting to be reconstructed # (looking like $SCRATCHDIR/IGCM_OUT/.../JobName/REBUILD or $SCRATCHDIR/TagName/JobName/REBUILD for version older than libIGCM_v2.0 # if RebuildFromArchive=NONE) MASTER=${MASTER:=irene|jeanzay} # Select the computing machine : MASTER=irene for example

- Submit the job:

ccc_msub rebuild_fromWorkdir.job # TGCC sbatch rebuild_fromWorkdir.job # IDRIS

The rebuild job submits pack_output.job automatically.

4.2. Restart Pack_output

The pack_output (e.g. in case it was not submitted by the rebuild job):

- Copy the libIGCM/pack_output.job file to the experiment directory or to the dedicated directory;

- Edit it :

libIGCM=${libIGCM:=::modipsl::/libIGCM} # path of the libIGCM library MASTER=${MASTER:=irene|jeanzay} # machine on which you work DateBegin=${DateBegin:=20000101} # start date of the period to be packed DateEnd=${DateEnd:=20691231} # end date of the period to be packed PeriodPack=${PeriodPack:=10Y} # pack frequency

- Submit the job:

ccc_msub pack_output.job # TGCC sbatch pack_output.job # IDRIS

create_ts.job and create_se.job are submitted automatically.

4.3. Restart Pack_restart or Pack_debug

- Copy the libIGCM/pack_debug.job and libIGCM/pack_restart.job files to the experiment directory or to the dedicated directory;

- Edit them :

libIGCM=${libIGCM:=::modipsl::/libIGCM} # path of the libIGCM library MASTER=${MASTER:=irene|jeanzay} # machine on which you work DateBegin=${DateBegin:=20000101} # start date of the period to be packed DateEnd=${DateEnd:=20691231} # end date of the period to be packed PeriodPack=${PeriodPack:=10Y} # pack frequency

- Submit the two jobs:

ccc_msub pack_debug.job ; ccc_msub pack_restart.job # TGCC sbatch pack_debug.job ; sbatch pack_restart.job # IDRIS

4.4. Restart the Time series with TimeSeries_checker.job

In case you haven't done it yet, copy config.card COMP POST and eventually run.card (post process only part of the simulation) in the POST_REDO/ directory.

- Copy the libIGCM/TimeSeries_Checker.job file to the experiment directory or to the dedicated directory besides COMP and config.card as minimum

- Since tag libIGCM_v2.8 you do not need to modify the TimeSeries_Checker.job anymore. By default it takes the variables from config.card.

- Check the value of TimeSeriesCompleted in run.card. For a complete check set :

TimeSeriesCompleted=

- Run the TimeSeries_Checker.job in interactive mode. It will call the missing create_ts jobs :

or alternatively, in ksh :

./TimeSeries_Checker.job

./TimeSeries_Checker.job 2>&1 | tee TSC_OUT # Create log file grep Batch TSC_OUT # find all the submitted jobs

- Answer n to the following question to only see what is missing and answer y to submit all missing create_ts.job :

"Run for real (y/n)"

4.5. Restarting the seasonal mean calculations

Transfer config.card, COMP, POST, and run.card (post process part of the simulation only) in the POST_REDO/ directory if you have not done so yet.

There are two methods:

4.5.1. SE_Checker.job (recommended method)

- Copy the libIGCM/SE_Checker.job file to the experiment directory or to the dedicated directory

- Run SE_checker.job in interactive mode. This will call the create_se jobs:

or alternatively, in ksh :

./SE_Checker.job

# Create logfile: ./SE_Checker.job 2>&1 | tee SE_OUT # Find all started jobs : grep Batch SE_OUT

4.5.2. Restart create_se.job

- Copy the libIGCM/create_se.job file to the experiment directory or to the dedicated directory;

- Edit it:

StandAlone=true libIGCM= # path of the libIGCM library PeriodDateEnd= # end date of the decade to be processed

- Submit the job:

ccc_msub create_se.job # TGCC sbatch create_se.job # IDRIS

4.6. Restarting the atlas figures creation

Transfer config.card, COMP, POST, and run.card (post process part of the simulation only) in the POST_REDO/ directory if you have not done so yet.

4.6.1. create_se.job (recommended method)

- Copy the libIGCM/create_se.job file to the experiment directory or to the dedicated directory;

- Edit it and indicate the period (yyyymmdd) for which you need to redo the atlas. For example.

# DateBegin=19600101 # PeriodDateEnd=19691231

- Submit create_se.job in batch mode

5. Optimization with Lucia

IPSLCM coupled model runs three executables (atmospehre, ocean and IO server) that use three separate sets of computing cores. The number of cores attributed to each one should be choose such as the execution times of each executable are as close as possible, to reduce the waiting time.

LUCIA is a tool implemented in OASIS that measure execution and waiting times of each executable, and helps to tune the number of execution cores for each model.

5.1. LUCIA documentation

http://www.cerfacs.fr/oa4web/papers_oasis/lucia_documentation.pdf

5.2. Using LUCIA

First install and run a coupled model. Then performs some modifications.

5.2.1. Get a version of OASIS with LUCIA

cd modipsl : mv oasis-mct oasis-mct_orig cp -rf /ccc/cont003/home/igcmg/igcmg/Tools/oasis3-mct_lucia oasis3-mct cd config/IPSLCM6 ; gmake clean ; gmake

5.2.2. Update DRIVER/oasis.driver (example for Irene)

Index: oasis.driver

===================================================================

--- oasis.driver (revision 3545)

+++ oasis.driver (working copy)

@@ -117,6 +117,7 @@

# To be changed

# On Curie

# /ccc/scratch/cont003/dsm/p86caub/LUCIA/lucia

+ /ccc/cont003/home/igcmg/igcmg/Tools/irene/lucia/lucia

# On Ada

# /linkhome/rech/psl/rpsl035/LUCIA/lucia

fi

5.2.3. Update COMP/oasis.card

Index: oasis.card =================================================================== --- oasis.card (revision 3545) +++ oasis.card (working copy) @@ -4,7 +4,7 @@ [UserChoices] OutputMode=n FreqCoupling=5400 -Lucia=n +Lucia=y

5.2.4. Run the model

5.2.4.1. Script_Output will contains some additionnal information

Component - Computation - Waiting time (s) - done on tstep nb LMDZ 1385.77 ( +/- 6.69 ) 7.58 ( +/- 6.14 ) 362 oceanx 1319.37 ( +/- 18.68 ) 85.41 ( +/- 18.70 ) 362 xios.x 0.00 ( +/- 0.00 ) 0.00 ( +/- 0.00 ) 0 New analysis Component - Calculations - Waiting time (s) - done on tstep nb: LMDZ 1379.55 6.45 362 oceanx 1300.79 85.21 362 xios.x 0.00 0.00 0

5.2.5. LUCIA will also procuce a graphic that you will find in :

IGCM_OUT/${TagName}/${SpaceName}/${ExperimentName}/${JobName}/CPL/Debug/${JobName}_*******_oasis_balance.eps

Attachments (7)

- Prod-avec-libigcm.ppt (179.5 KB) - added by trac 11 years ago.

- Prod-avec-libigcm.jpg (77.8 KB) - added by trac 11 years ago.

- libigcm_prod.jpg (188.9 KB) - added by trac 11 years ago.

- libigcm_prod_rotate.jpg (191.5 KB) - added by trac 11 years ago.

- RunChecker-OK.jpg (204.5 KB) - added by ncarenton 10 years ago.

- RunChecker_extrait.jpg (542.9 KB) - added by ncarenton 10 years ago.

- hermes.jpg (226.7 KB) - added by mafoipsl 8 years ago.

Download all attachments as: .zip